Model Autophagy Disorder: AI Will Eat Itself

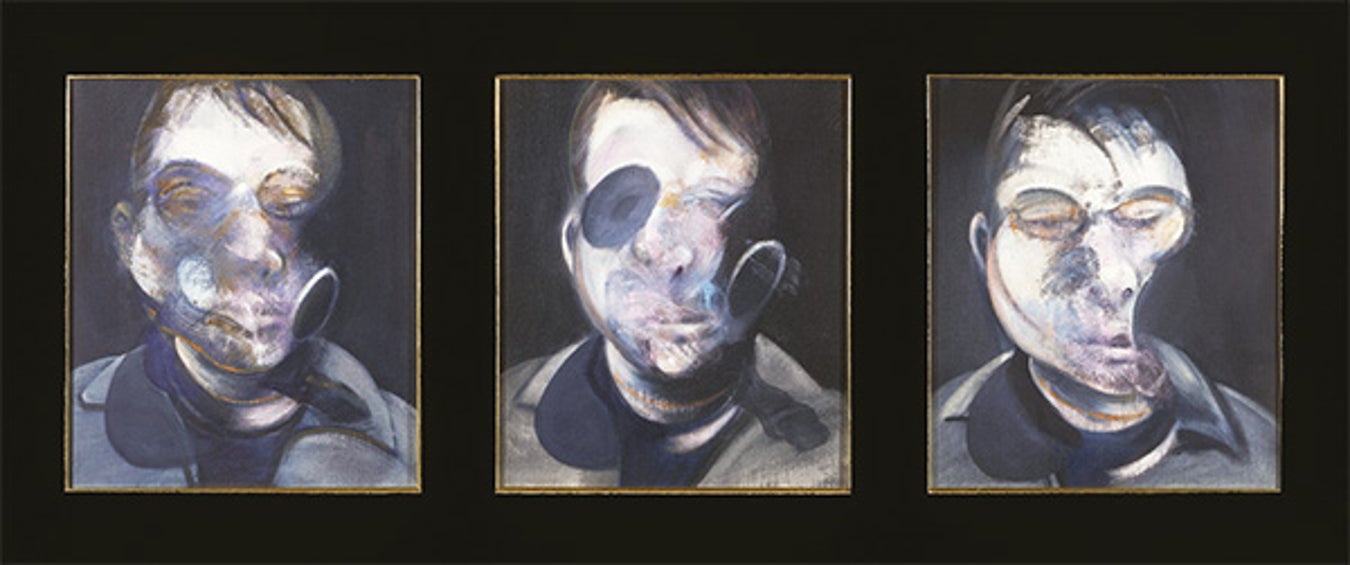

Three Studies for Self-Portrait by Francis Bacon.

Model collapse isn't an AI Habsburg chin, but rather the possibility of AI becoming the McDonald's we secretly crave. While researchers worry about vanishing tail distributions and recursive training loops, the underlying tragedy is that we are building monotonous machines for a market that prioritizes predictability over surprise. The models won't "collapse." They'll converge on exactly the mediocrity we deserve.

AI will eat itself. AI research groups have documented a phenomenon they call "Model Autophagy Disorder" or "model collapse." The basic mechanics are simple: when AI models generate content (text, images, whatever) and that synthetic content gets fed back into training new models, those new models get progressively worse. After a few generations, they collapse entirely, spitting out gibberish or the same image on repeat.

Model Collapse: The Habsburg Chin of AI

The original paper that coined "model collapse" buried the lede in academic jargon about "tails of the original content distribution disappearing." More plainly stated, this means models trained on their own outputs develop increasingly exaggerated features while losing all the interesting variations.

Picture a newspaper left in the rain. The headlines stay readable the longest, like the high-probability words that models love to emphasize. The supporting details, the colorful quotes, and the surprising statistics blur and vanish first. This is model collapse: not a sudden failure but a slow smudging where only the most obvious patterns survive.
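To make the smudging concrete, here is a toy sketch, not the setup from any of the papers mentioned here. It assumes a "model" is nothing more than a probability table over 50 hypothetical tokens with a Zipf-like shape, and that "training" means counting frequencies in a finite sample of the previous model's output. The token names, sample size, and generation count are all made up for illustration.

```python
import random
from collections import Counter

def next_generation(probs, n_samples, rng):
    """Sample from the current model, then 'retrain' by counting frequencies."""
    # Only tokens the current model can still produce are candidates.
    live = [t for t in probs if probs[t] > 0]
    sample = rng.choices(live, weights=[probs[t] for t in live], k=n_samples)
    counts = Counter(sample)
    # Tokens that were never sampled get probability 0 and cannot come back.
    return {t: counts[t] / n_samples for t in probs}

def support_size(probs):
    """Number of tokens the model can still generate."""
    return sum(1 for p in probs.values() if p > 0)

rng = random.Random(0)

# Hypothetical "real" distribution: a few headline tokens, a long tail of rare ones.
raw = {f"tok{i}": 1 / (i + 1) for i in range(50)}
total = sum(raw.values())
model = {t: w / total for t, w in raw.items()}

history = [support_size(model)]
for _ in range(20):
    model = next_generation(model, n_samples=200, rng=rng)
    history.append(support_size(model))

print(history)  # surviving vocabulary size per generation
```

Under these assumptions the vocabulary can only shrink: once a rare token misses a single sample, its probability is zero in every later generation, while the headline tokens keep getting resampled.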

The Surprise Metric Nobody's Measuring

When discussing model collapse, researchers talk about KL divergence ("how surprised would I be if I expected distribution A but got distribution B instead?") and perplexity (how unlikely a model thinks a sentence is).

| Metric | Low Value | High Value | Example |
|---|---|---|---|
| KL Divergence | "You speak the same language as I do" | "I don't understand Klingon" | You vs. roommate (90% overlap) → Low; you vs. grandma (different catalogs) → High |
| Perplexity | "Yeah, I'd totally generate that" | "What the hell is this?" | "Weather is nice today" → Low; "Weather is fish today" → High |
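For readers who want numbers behind the table, here is a minimal sketch of both metrics using their standard textbook definitions. Every probability below is invented for illustration (the three-genre "catalogs" and the per-token probabilities are assumptions, not measurements from any real model).

```python
import math

def kl_divergence(p, q):
    """KL(p || q) in nats for discrete distributions given as dicts.

    Assumes q assigns nonzero probability to every key that p uses.
    """
    return sum(pv * math.log(pv / q[k]) for k, pv in p.items() if pv > 0)

def perplexity(token_probs):
    """Perplexity of a sequence, given the model's probability for each token."""
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)

# Hypothetical catalogs: how often each person picks each genre.
you      = {"rock": 0.50, "jazz": 0.30, "folk": 0.20}
roommate = {"rock": 0.45, "jazz": 0.35, "folk": 0.20}
grandma  = {"rock": 0.05, "jazz": 0.15, "folk": 0.80}

kl_roommate = kl_divergence(you, roommate)  # similar tastes
kl_grandma = kl_divergence(you, grandma)    # very different tastes

# Hypothetical per-token probabilities a model might assign.
nice_today = [0.2, 0.3, 0.25, 0.4]    # "Weather is nice today"
fish_today = [0.2, 0.3, 0.0001, 0.4]  # "Weather is fish today"

print(kl_roommate, kl_grandma)
print(perplexity(nice_today), perplexity(fish_today))
```

A single very unlikely token ("fish") is enough to push the whole sentence's perplexity up, which is why perplexity is sensitive to exactly the unusual content that collapse erodes.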

This reveals the mechanism of model collapse: as models eat their own outputs, they become more confident about fewer things. The perplexity drops on common phrases (good!) but skyrockets on anything unusual (bad!).

For the average person, my collapse metric is "surprise." We aren't frequently surprised by an LLM, but it does happen: those moments when Claude or GPT produces something that makes you pause, reconsider, or maybe even laugh. That's the canary in the coal mine.

When models stop surprising us, they've lost access to the tail distributions where "creativity" lives. The Nature paper showed that minority data disappears first during "early model collapse." But users won't notice missing statistics about rare diseases or edge-case programming patterns. They might notice when every response feels like it came from the same boring template.

Why the Synthetic Data Gold Rush Hasn't Imploded (Yet)

The synthetic data market is booming, generating $400-576M in 2024 with 35-41% growth. If model collapse is real, why isn't this market imploding?

Simple: the milking of this cash cow has just begun. It's too soon for anyone in the software space to be self-reflective. We need some huge blimp crashes first. But more importantly, there are enough niche domains where data can be "faked" and still provide value:

- Credit scoring: Generate thousands of synthetic transactions that follow known patterns

- Healthcare: Create patient records that match disease progressions from textbooks

- Weather: Use physics models to generate plausible but freakish meteorological data

- Legal documents: Spin variations on standard contracts and agreements

Gerstgrasser's rebuttal showed that collapse can be avoided when synthetic data accumulates alongside real data instead of replacing it. I would hazard a guess you need about 70% real data as your "vitamin," enough to keep the tail distributions alive. But that's just intuition. Nobody really knows the minimum dose.
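The vitamin idea can be sketched with a toy model, which is not a reproduction of Gerstgrasser's experiments. It assumes a "model" is a probability table over 50 hypothetical tokens, that each generation is refit from a finite sample of the previous one, and that "mixing in real data" means blending the real distribution back in with weight `real_fraction`. The 0.7 is only the guess from the paragraph above, and all other numbers are made up.

```python
import random
from collections import Counter

def resample(probs, n_samples, rng):
    """Draw a finite sample from the model and refit by counting frequencies."""
    live = [t for t in probs if probs[t] > 0]
    sample = rng.choices(live, weights=[probs[t] for t in live], k=n_samples)
    counts = Counter(sample)
    return {t: counts[t] / n_samples for t in probs}

def surviving_tokens(real, real_fraction, generations, n_samples, seed):
    """Run the loop and return how many tokens the final model can still emit."""
    rng = random.Random(seed)
    model = dict(real)
    for _ in range(generations):
        synthetic = resample(model, n_samples, rng)
        # Blend real data back in; real_fraction = 0 means purely synthetic training.
        model = {
            t: real_fraction * real[t] + (1 - real_fraction) * synthetic[t]
            for t in real
        }
    return sum(1 for p in model.values() if p > 0)

# Hypothetical "real" distribution with a long tail of rare tokens.
raw = {f"tok{i}": 1 / (i + 1) for i in range(50)}
total = sum(raw.values())
real = {t: w / total for t, w in raw.items()}

pure_synthetic = surviving_tokens(real, 0.0, generations=30, n_samples=200, seed=0)
mixed = surviving_tokens(real, 0.7, generations=30, n_samples=200, seed=0)

print(pure_synthetic, mixed)
```

In this toy, any nonzero `real_fraction` keeps every token alive by construction, so it says nothing about the minimum dose. It only illustrates why a steady supply of real data protects the tail at all.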

The McDonald's Problem

Another danger I can think of is that most people will prefer the collapsed models. They're predictable, safe, and "professional." No unpleasant surprises. Like McDonald's, they offer reliability over excellence and familiarity over innovation.

Yes, the market might actively select for model collapse. When TechTarget explained how models lose variance and converge to averages, they missed that many users want exactly that. Corporate communications, customer service, and routine documentation are all domains that reward blandness and homogeneity.

The minority who need tail distributions (researchers hunting rare insights, writers seeking unexpected metaphors, engineers solving edge cases) become collateral damage in the race to the middle.

The Human Collapse We're Ignoring

And maybe we worry about models forgetting while ignoring a darker feedback loop. As subject matter experts (SMEs) increasingly rely on LLMs for their output, their own creative muscles atrophy. They produce more LLM-like content, and future models train on this degraded expert output. The collapse happens in wetware before software.

I predict we'll eventually lose subject matter experts not because AI replaces them, but because their output becomes stultified by too much LLM usage. They'll lose the ability to generate the surprising, tail-distribution insights that made them experts in the first place.

The LLM Sharecroppers

The "solution"? There will be SMEs, professors, and writers who produce content for LLMs full time. The catch: these people won't be able to use LLMs themselves. As Devansh's investigation suggests, we'll need human "vitamin supplements" to keep our models healthy.

Imagine the job posting: "Seeking writers to produce original content. Must work without AI assistance. Your writing will train next-generation models." It's a kind of digital sharecropping with humans laboring to feed machines that make their skills obsolete.

The Comfortable Catastrophe

Model collapse terrifies researchers because it threatens the exponential improvement narrative. Wikipedia now tracks it as a fundamental ML phenomenon. But what if it's not a bug but a market feature?

Obviously, a collapsed model is terrible at handling black swans: financial crashes, novel diseases, and creative breakthroughs. When you've trained out the tail distributions, you've trained out the ability to handle anything truly unexpected. But most days, most people don't need to handle the unexpected. They need to write emails, summarize documents, and generate marketing copy that sounds professional.

Perhaps the thing to be concerned about is not that models will become boring, but that we will prefer them that way. We're sleepwalking into a future in which "good enough" becomes acceptable, and the long tail of human knowledge is traded for the security of the bell curve's peak.

The models won't collapse. They'll just become exactly as boring as we want them to be.